We all make countless decisions every day when we work. Which of the hundreds of reasonable things in my list will provide the highest value? How am I going to find the best solution for our customers? How will we know if we have even solved the problem?

Meanwhile, with every interaction they have with you, your customers are sending you signals that can be used to make better decisions. At Easyart we put a lot of effort into basing our decisions on data first, and opinions second. As Jim Barksdale, former CEO of Netscape, famously said:

If we have data, let’s look at data. If all we have are opinions, let’s go with mine.

This post is about building better experiences for our customers through research and testing.

Research

Customer research

We must understand our customers in order to solve problems for them.

Daily survey

Our brilliant customer service team phones a small handful of customers and asks them five questions. The answers are recorded in a Google Doc.

Though it’s a small sample size, it provides useful qualitative information which helps us spot patterns in individual customer stories in a way that we could never get from an Analytics funnel or aggregate market report.

Market research

Periodically we conduct large-scale market research which provides us with aggregate information on our customer demographic. This happens less frequently than our daily surveys, but with a much larger sample size.

Google Analytics, KISSmetrics, Crazy Egg and custom reporting

We use Google Analytics (from here on, GA) and KISSmetrics (from here on, KM) extensively to find out how our customers are interacting with our site.

Do and measure

Sometimes a change isn’t A/B testable, we don’t want to clog up the testing queue, or we’re confident enough in our hypothesis that we’re happy to measure the effect after launch.

For every significant feature we create a GA annotation so we can do before-and-after analysis to check that everything went as expected. GA and KM provide different vantage points (GA tells you what’s happening; KM tells you who’s doing it), and we also use Crazy Egg for heatmap and scroll tracking.

We track click events on most of the elements we care about, so we can understand how users are using the site.

Funnels

Funnels are ways of modelling a user’s journey through the key parts of your site. We keep a regular eye on these to try to identify under-performing steps that might represent problems or opportunities.
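As a rough illustration of what “under-performing steps” means, here is a minimal JavaScript sketch that turns funnel counts into step-to-step conversion rates. The step names follow the funnel mentioned elsewhere on this blog, but the visitor numbers and function names are made up for the example; they are not our real figures or tooling.

```javascript
// Hypothetical funnel counts: visitors reaching each step in one week.
const funnel = [
  { step: "search", visitors: 10000 },
  { step: "product", visitors: 4000 },
  { step: "cart", visitors: 800 },
  { step: "order complete", visitors: 200 },
];

// For each step after the first, work out what share of the previous
// step's visitors made it this far.
function stepConversions(steps) {
  return steps.slice(1).map((current, i) => ({
    from: steps[i].step,
    to: current.step,
    rate: current.visitors / steps[i].visitors,
  }));
}

// The transition with the lowest rate is a candidate for a closer look.
function weakestStep(steps) {
  return stepConversions(steps).reduce((worst, s) =>
    s.rate < worst.rate ? s : worst
  );
}

console.log(stepConversions(funnel));
console.log(weakestStep(funnel));
```

A low rate is only a prompt to investigate: some steps naturally convert worse than others, so the trend over time usually matters more than the absolute number.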

Custom reporting

To answer very specific questions, we’ll run custom reports, which might involve data wrangling in SQL or Excel. For example: finding the average number of line items in our abandoned baskets, comparing the performance of the main navigation with the various search functions, or understanding how customers’ tendency to frame their print relates to the size of the print.

Testing

UserTesting.com

We use UserTesting.com to conduct remote user interviews. These allow us to observe people using the site as they follow a list of tasks. It gives us the “why” that is difficult to measure through metrics alone, and we predominantly use it to validate new UX ideas, as well as for occasional competitor comparisons.

Watch the videos together as a team and share your findings.

It can be extremely humbling to find out your brilliant idea makes no sense to the user, but it’s better that these lessons are learned together.

Test your iterations to gain confidence

Google Ventures' Jake Knapp puts the emotional journey of user testing perfectly in this post:

First session: “We’re geniuses!” or “We’re idiots!”

Sessions 2–4: “Oh, this is complicated…”

Studies 5-6: “There’s a pattern!”

By running new tests with each of our major iterations we can see quick improvement which usually results in us building enough confidence to launch (though we occasionally reject ideas that don’t test well).

These are not real customers

It should be noted that these are not your customers, nor are they in the same frame of mind that your customers will be, so don’t treat this as customer research.

A/B testing

A/B testing can give us the what to the user testing’s why, by providing a framework to validate a hypothesis.

Much has been written on the topic, and it’s easy to believe it can be used to unearth hidden conversion opportunities that will make you millions. The truth is, not everything is suitable for A/B testing, and there is a fine art to planning tests properly.

Start with a strong hypothesis

Don’t use a scattergun approach. Your hypothesis should be something you are confident about and that is backed by data.

Ensure that the hypothesis is testable.

A good A/B testing candidate should be a noticeable change on a page with decent traffic, with an expected double-digit improvement to a metric close to the area you are affecting (e.g. don’t expect a change on your category page to have a measurable effect on overall conversion through your checkout).

We usually calculate the test plan using something like experimentcalculator.com.
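To show why traffic and the size of the expected improvement matter so much, here is a minimal sketch of the kind of sum a test-plan calculator does. It uses the standard normal-approximation formula for comparing two conversion rates; that is an assumption for illustration, not necessarily the method experimentcalculator.com uses, and the function name and inputs are hypothetical.

```javascript
// z-scores for a two-sided 95% confidence level and 80% power.
// These defaults are assumptions; most calculators let you change them.
const Z_ALPHA = 1.96;
const Z_BETA = 0.8416;

// baselineRate: current conversion rate, e.g. 0.03 for 3%.
// relativeLift: smallest improvement worth detecting, e.g. 0.10 for +10%.
function visitorsPerVariation(baselineRate, relativeLift) {
  const p1 = baselineRate;
  const p2 = baselineRate * (1 + relativeLift);
  const variance = p1 * (1 - p1) + p2 * (1 - p2);
  const effect = p2 - p1;
  return Math.ceil(
    (Math.pow(Z_ALPHA + Z_BETA, 2) * variance) / (effect * effect)
  );
}

// Illustrative inputs only: a 3% baseline with different expected lifts.
[0.02, 0.1, 0.2].forEach((lift) => {
  const percent = Math.round(lift * 100);
  console.log(
    `+${percent}% lift: ${visitorsPerVariation(0.03, lift)} visitors per variation`
  );
});
```

The required sample grows roughly with the inverse square of the effect size, so halving the expected lift roughly quadruples the traffic you need. That is why small tweaks on low-traffic pages rarely make good test candidates.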

Agree the success metric upfront.

Don’t leave it open to interpretation, don’t let ego get in the way, and be willing to lose. The truth can be humbling.

Use more than one source of data.

We use Optimizely to run the tests, but do most of our analysis through Google Analytics and KISSmetrics custom segments. This allows us to understand how users of each variation are behaving throughout their whole journey.

Go with your gut

We don’t make every decision using data. Sometimes it just isn’t possible, particularly with creative decisions, and at other times there isn’t a lot that can be learned by analysis.

There is never absolute truth in numbers, and in these cases it’s best to just go with your instincts and have the confidence to push forward.


We’re big fans of TDD here at Easyart. So far this has only applied to our Ruby code, but with JavaScript increasingly becoming a bigger part of our technology stack, we needed to start testing that too.

Choosing the right tool

We chose Jasmine as our testing framework, partly because of its similarity to RSpec, and also because it’s really easy to get started with.

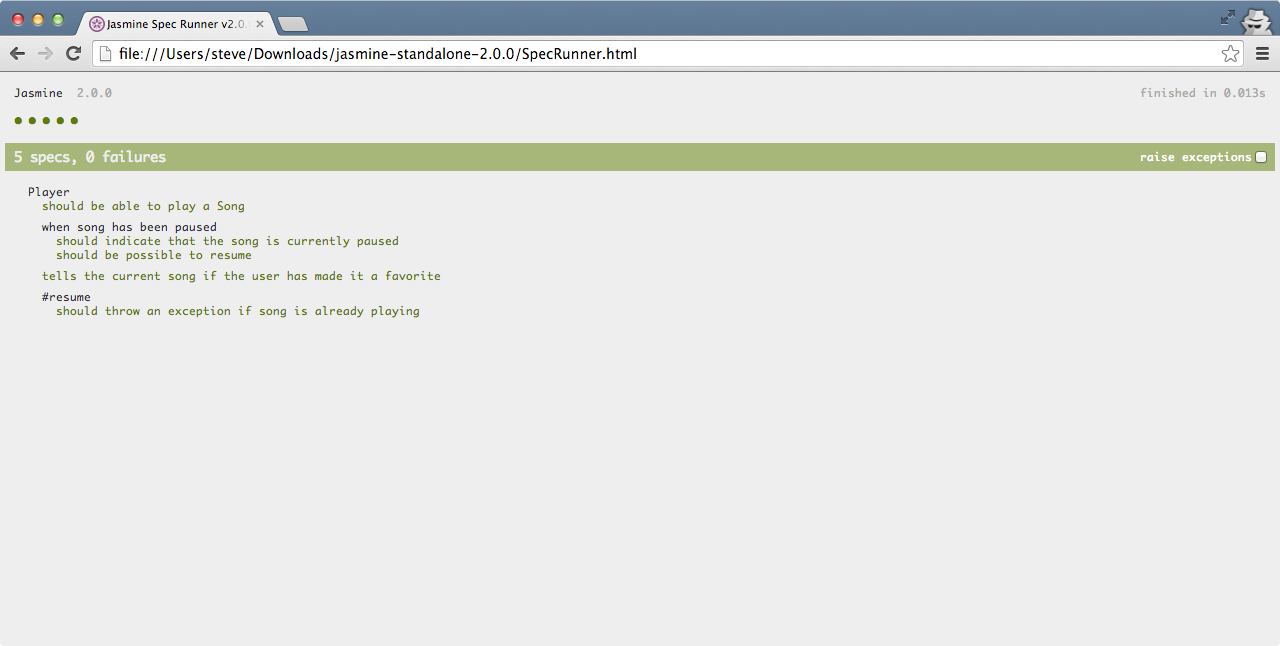

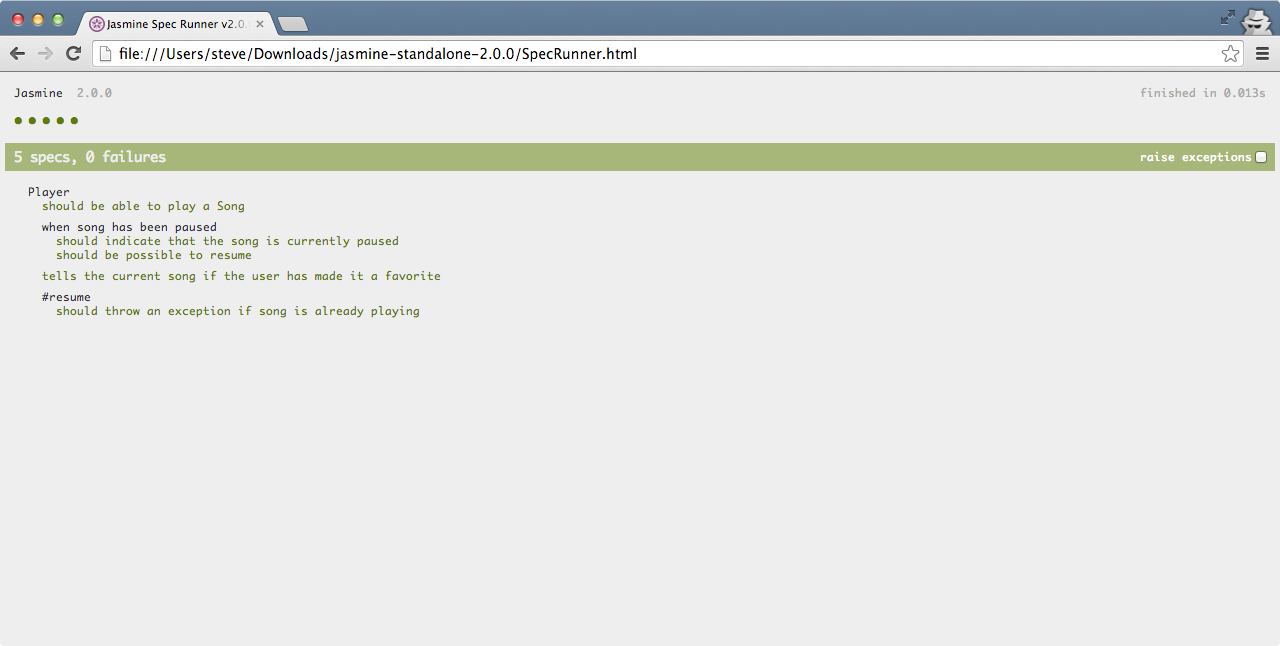

You can get started with Jasmine fairly quickly. Their standalone distribution has everything you need to get up and running. Opening the included SpecRunner.html will run the example tests and show you the results. From here you can swap out the example files with your own.

Getting up and running on Rails

Once we settled on Jasmine, we needed a way to integrate it into our current workflow, as we don’t want to be running tests manually all the time.

Luckily, there is an official Jasmine gem. To add this to your project, add the following to your Gemfile:

    group :development, :test do
      gem "jasmine"
    end

To set up the files and directory structure that the gem expects for your tests, run the following command from your CLI:

    rails g jasmine:install

Running the tests

There are two ways you can run your Jasmine tests. The first is to run the following rake task:

    rake jasmine

This will fire up a web server, accessible at localhost:8888 where you will see an HTML document displaying the results of your tests.

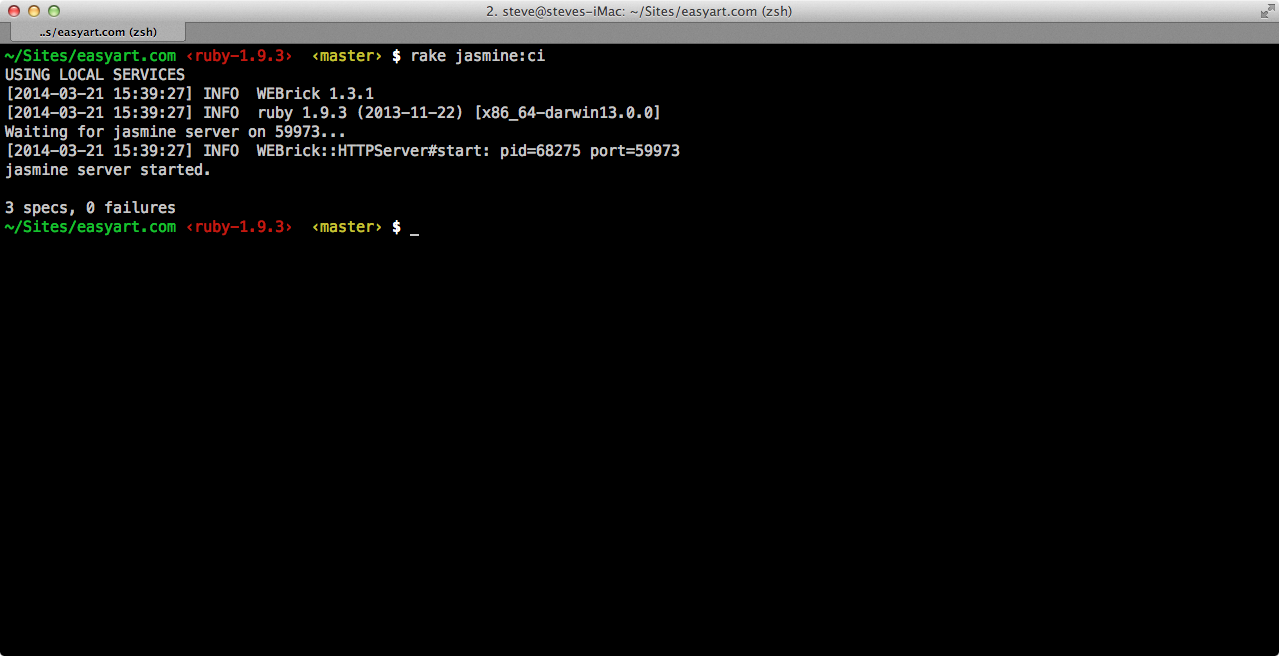

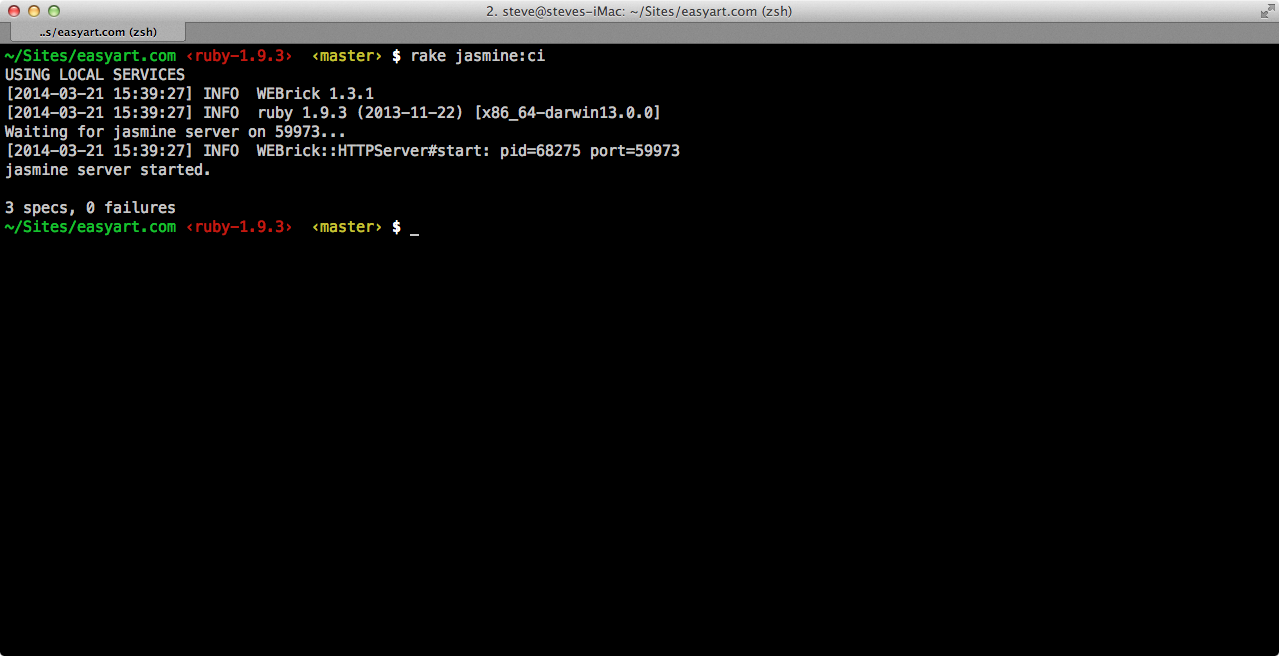

You can also run the tests in continuous integration mode from the command line, which will show you the results in the terminal:

    rake jasmine:ci

Writing the tests

As I mentioned before, Jasmine is easy to get started with as long as you are reasonably comfortable with JavaScript. But if, like me, you’re new to writing tests, it can be hard to figure out where to start.

The key is to write code that is actually testable. The simplest way to do that is to ensure your code returns something. I attended a testing workshop a little over a year ago with Rebecca Murphey, and she described this as:

“Things don’t do things - things announce things”

She also advised keeping the following question in mind when writing tests:

“Given this input, do I get this output?”

For more on testable JavaScript, I recommend starting with Rebecca’s A List Apart article.
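To make the “given this input, do I get this output?” idea concrete, here is a small sketch. `formatPrice` is a hypothetical helper invented for this example, not code from our site; the point is that it returns a value rather than writing to the page, so a spec can check the result directly.

```javascript
// Hypothetical helper: returns a value instead of touching the DOM,
// so a spec can ask "given this input, do I get this output?".
function formatPrice(pence) {
  return "£" + (pence / 100).toFixed(2);
}

// Jasmine provides describe/it/expect as globals when it runs a spec file.
// The guard only lets this snippet load outside Jasmine as well.
if (typeof describe === "function") {
  describe("formatPrice", function () {
    it("formats pence as pounds with two decimal places", function () {
      expect(formatPrice(1250)).toBe("£12.50");
    });

    it("pads amounts under one pound", function () {
      expect(formatPrice(5)).toBe("£0.05");
    });
  });
}
```

Code that updates the page as a side effect is much harder to test this way. Splitting it into a function that returns a value and a thin layer that displays it keeps most of the logic within reach of a spec.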

Going forwards

So far we don’t have as much JavaScript code coverage as we would like, but with a little discipline we’ll eventually get to a place where we can be confident that our code is always working.


I’ve been struggling to solve a problem for some time now. The agile (with a lower-case “a”) approach we use for software development and the long-term roadmaps we have as a business have always been too disconnected, but I never quite knew how to connect them.

Recently, inspired by a deeper understanding of the lean approaches, I think I’ve finally connected them together in these five steps. It’s still an emerging process, but I’m hopeful it will solve a number of problems.

Keep an inventory of hypotheses

We all have ideas for ways to improve the site. Some of them are bound to make a positive difference, others will make the site worse, and most will have no measurable effect at all.

We keep a spreadsheet of these ideas, documented as hypotheses (if I do x, y will happen), along with as much additional information as we have. This ideas backlog becomes our ammunition to improve the site. Importantly, these are not added to Basecamp (where our tasks live) until they have been prioritised.

Establish themes

In order to prioritise the ideas backlog, I work with other managers to decide on a theme for each month. To establish the theme we might look at under-performing parts of the conversion funnel, goals in other parts of the business, tech roadmap etc.

Sometimes the theme might be very specific (increase percentage of framed products sold), or more generic (increase AOV or improve funnel conversion for search, product, cart, order complete).

Rallying the team around a focussed goal helps us dive deeper into interconnected ideas. We spend 60% of our development time working on tasks related to the theme, while the other 40% is reserved for fixing bugs, cost of doing business tasks, documentation, research etc.

Prioritise hypotheses around themes

Once the theme is established, we hold a workshop to prioritise the hypotheses from the backlog in the ways most likely to improve the target KPI.

These competing ideas will vary wildly in difficulty and will probably contradict each other. Using experiment mapping and PIE ranking we can prioritise these based on value.
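PIE ranking scores each idea for Potential, Importance and Ease, then averages the three. A minimal sketch of that sum, with made-up hypotheses and scores (ours live in a spreadsheet, not in code):

```javascript
// Hypothetical backlog entries; each score is a 1-10 judgement call.
const hypotheses = [
  { idea: "Show framed previews on category pages", potential: 8, importance: 7, ease: 4 },
  { idea: "Simplify the cart page", potential: 5, importance: 9, ease: 8 },
  { idea: "Reword the search placeholder", potential: 3, importance: 4, ease: 10 },
];

// PIE score: the plain average of the three ratings.
function pieScore(h) {
  return (h.potential + h.importance + h.ease) / 3;
}

// Highest score first; copy the array so the backlog itself is untouched.
function rankByPie(list) {
  return [...list].sort((a, b) => pieScore(b) - pieScore(a));
}

rankByPie(hypotheses).forEach((h) => {
  console.log(pieScore(h).toFixed(1), h.idea);
});
```

The scores are still opinions, but writing them down per factor makes it easier to see why two people disagree about a priority.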

Create tasks from prioritised hypotheses

Once priorities are established, tasks are added to the “Next up” or “Backlog” lists in Basecamp, along with the corresponding hypothesis. Depending on our level of confidence in the task, we may use a combination of data analysis, user testing and competitor analysis to research the best solution.

Each task moves through a Kanban-esque process, passing through “In Progress” and “QA” and collecting more and more information as it goes.

Validate hypothesis

Once in “QA”, a combination of user testing and split testing will be used to validate or invalidate our hypothesis.

Minor variations will take a long time to reach statistical confidence, so we only split test meaningful changes. We always agree on the measure of success upfront, but also cross-check side-effects with Analytics segments.

Once a conclusion is reached, the task is marked as complete, links to the task, discussion and tests are added to the spreadsheet before the hypothesis is moved to a “completed tests” sheet, and annotations are added to Analytics for before-and-after analysis.

With each test, we increase our shared understanding of what our customers want, which in turn generates high quality ideas for future testing.


This is part 2 of a post which tries to explain a method of enhancing an auto-complete search box to act in a similar way to the Google search box.

» Read part one here «

A solution

Something that would work perfectly for this style of query would be to use a regular expression query with a lookahead assertion.

“But wait, MySQL doesn’t support look(ahead|behind) assertions!” I hear you say. That’s OK, we’ll do it the hard way, in code.

The query

First we need to mangle the input into a query that will get us most of the way using plain old SQL. For example, if the user enters the string pcr (perhaps looking for the print category report), we want to end up with the following:

    select * from admin_pages where (
        page_description like '%p%' and
        page_description like '%c%' and
        page_description like '%r%' )

This will return all pages that have every one of those letters somewhere in the description, listed here in alphabetical order:

- /admin/print_categories.php

- /admin/product_sales_report.php

- /admin/supplier_stocked_item_report.php

But there’s a problem. The ordering of letters matters. We only want pages that contain a p followed by 0 or more letters, then a c followed by 0 or more letters, followed by an r. No matter, this will at least give us something to start with. It’s better than stuffing the entire database table into memory for searching.

The code that constructs this query is below (I’ve removed any security/sanitisation code to avoid cluttering up the example):

    <?php
    // Wrap a single letter in a LIKE clause
    function page_desc_sql($s) {
        return "page_description like '%{$s}%'";
    }

    // a-z only please...
    $query = preg_replace("/[^a-z]/", "", strtolower($_GET["q"]));

    // build query: one LIKE clause per letter, joined with "and"
    $s = implode(" and ", array_map("page_desc_sql", str_split($query)));

    $sql = "
        select
            page_id,
            page_name,
            page_description
        from
            admin_pages
        where
            ({$s})
        order by page_description asc
    ";
    ?>

So now we have a list of pages ready to process. Let’s create that lookahead expression.

    <?php
    // Turn a single letter into "this letter next, then anything"
    function page_regex($x) {
        return "(?={$x}).*";
    }

    $regex = "/" . implode(array_map("page_regex", str_split($query))) . "/i";
    ?>

This sets $regex to the string:

    /(?=p).*(?=c).*(?=r).*/i
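To see what the two stages do to a list of candidates, here is the same idea sketched in JavaScript rather than PHP. The page descriptions and function names are invented for the example; only the sanitising step and the shape of the regex are taken from the code above.

```javascript
// Same sanitising step as the PHP: lower-case, a-z only.
function sanitise(input) {
  return input.toLowerCase().replace(/[^a-z]/g, "");
}

// Stage 1 (the job the SQL LIKE clauses do): every letter appears
// somewhere in the text, in any order.
function containsAllLetters(query, text) {
  const lower = text.toLowerCase();
  return query.split("").every((ch) => lower.includes(ch));
}

// Stage 2: build the lookahead pattern, e.g. (?=p).*(?=c).*(?=r).*
// which only matches when the letters appear in that order.
function inOrderRegex(query) {
  const pattern = query.split("").map((ch) => `(?=${ch}).*`).join("");
  return new RegExp(pattern, "i");
}

// Hypothetical page descriptions.
const descriptions = ["Print category report", "Carrier map", "Supplier list"];

const query = sanitise("PCR");
const candidates = descriptions.filter((d) => containsAllLetters(query, d));
const matches = candidates.filter((d) => inOrderRegex(query).test(d));

console.log(candidates);
console.log(matches);
```

“Carrier map” survives the first stage because it contains a p, a c and an r, but the regex rejects it because no c follows the p. One caveat with this pattern: because `.*` can match nothing, a doubled letter in the query (such as pp) is satisfied by a single p in the text.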

The final piece of the puzzle is to take the results and pass them through this regex, only returning the ones that match. Then output some JSON for the auto-complete to use.

    <?php
    $data = array(); // stays an empty list if nothing matches

    while ($q->next_record()) {
        $x = $q->get_row();

        // Keep only rows whose description has the letters in order
        if (preg_match($regex, $x["page_description"])) {
            $x["page_id"] = (int)$x["page_id"]; // Cast integer for json
            $x["url"] = realpath(SECURE_WEB_SERVER_URL) . $x["url"];
            $data[] = $x;
        }
    }

    header('Content-type: application/json; charset=iso-8859-1');
    echo json_encode($data);
    ?>


There is something of a revolution underway in the way that we approach user experience at Easyart. With Agile processes so important to the way we build software, we felt we were ready to implement Lean UX approaches to help solve some of our user experience problems. It’s early days but I thought I would share some of the things that have worked for us so far.

Note: I’ve added some notes on this to the tech handbook which is emerging at the moment, but I thought I would elaborate on some of the processes in this post.

Agree on outcomes before deciding on features

By starting with a KPI we want to affect, we can develop hypotheses which we believe will help us reach that goal, and have a clear-cut way of measuring success.

Use personas to guide decisions

Focussing on user needs helps us build a more human experience so we have developed three personas which we can use to step through different on-site journeys.

Shared understanding and hypotheses over design heroes

Getting stakeholders involved in the conception of ideas builds a shared understanding, which is invaluable for collaborating across departments and skill-sets. We’ve had some success with gut test exercises when working on new homepage visual design, group sketching in design studio exercises to improve navigation, and card sorting to agree on priorities.

Use the live styleguide to build pages from modules

Changing from a page-based approach to a component-based approach allows everyone to sketch pages, and our live style guide is the directory of available modules.

Validate as early as possible

Our opinions are not as important as customer feedback, so we try to validate our hypotheses as early as possible. We use UserTesting.com for user testing, and Optimizely for split testing.

We’re at the formative stages of this process at the moment, but I’m hopeful it’s going to make a big difference.
